It might be overstated (there’s probably always going to be the “‘bed, bog, bath’ element”) but Mr Billingsley’s comment is almost certainly right: we’re going to be digital advertisers because the world is now digital, and getting more so.

What does all this digital malarkey mean for people looking to get into the communications business and, before we look at that, what does digital mean anyway?

There are two important things there for grads trying to get into the industry. The first is, “whose roof”?

This interactivity lets you do a lot more than you can at your typical traditional ATL agency. Or to reunite that idea with its owner:

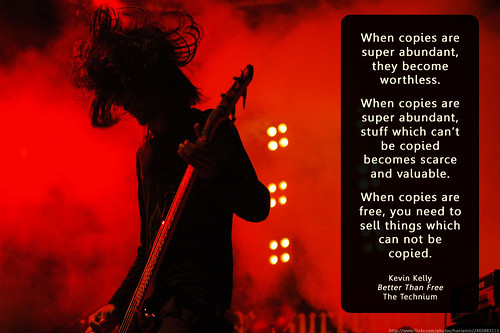

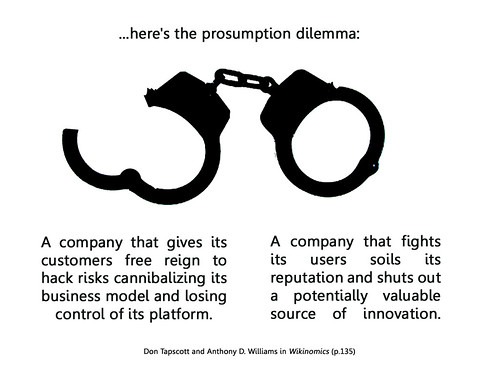

I think that's really exciting (and Mr Tait has 9 more great reasons digital is better for those interested). In digital you’re unshackled from just doing TV, print and radio, and free to do all sorts of exciting things like sites, applications, blogs, games, branded content, widgets, podcasts, social things and experimental stuff. And a lot of this (not all) is actually useful to people; it's additive rather than interruptive.

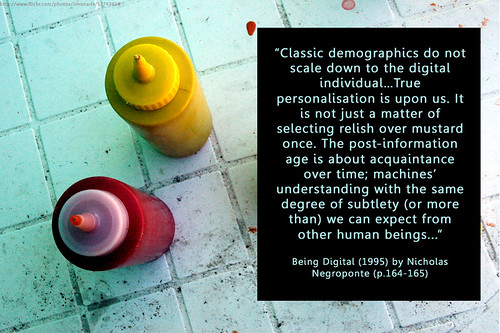

In my experience grads tend to think of digital as something on-the-sidey and techy. Maybe it once was. Now it ain’t. Technology is so ubiquitous, so ‘ready-to-hand’, that it’s becoming invisible, and when that happens it gets socially interesting. In other words, technology and culture used to be separate; increasingly they are the same (look what you're doing now).

It’s a brilliant time to get into an industry that’s only going to grow (even in these tough times) and that’s much more about interesting interactive ideas than it is about tech.

Go on, apply!

Obviously I am biased but this would be a good place to start...

(For those wanting more, I suggest you have a play in here, read this, canoe back up this and maybe watch this. That should be enough to be getting on with.)